Compare commits

205 commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

ebcc6e5086 | ||

|

|

c0766f6abc | ||

|

|

7bc90c425f | ||

|

|

f2c494d4d6 | ||

|

|

8147bbf3b9 | ||

|

|

adbeeaf203 | ||

|

|

f55ed56215 | ||

|

|

d1d33c4bce | ||

|

|

b7de08ba0b | ||

|

|

405cef8d28 | ||

|

|

21262d2e43 | ||

|

|

75d64f9dc6 | ||

|

|

929448d64b | ||

|

|

134aad29f1 | ||

|

|

dcd4d7a7a8 | ||

|

|

e0c26c0952 | ||

|

|

9fa2d0b6ca | ||

|

|

0877e34289 | ||

|

|

92c3d3840d | ||

|

|

98017e9a76 | ||

|

|

6932c38854 | ||

|

|

ecdb5ef859 | ||

|

|

81c44f5f4e | ||

|

|

59c07e315a | ||

|

|

07f8ec41a1 | ||

|

|

01e52e795a | ||

|

|

98ec0f5e0c | ||

|

|

eb3d7a183a | ||

|

|

93aebedd7d | ||

|

|

616daec0d9 | ||

|

|

745462d3ae | ||

|

|

0634c1e05f | ||

|

|

b230b693f8 | ||

|

|

97a0d00bfd | ||

|

|

f2f0f426aa | ||

|

|

7be5905b46 | ||

|

|

7c9e91f91a | ||

|

|

586e9a613b | ||

|

|

e61efcd00d | ||

|

|

9311d924f7 | ||

|

|

8947d3cb19 | ||

|

|

2150363108 | ||

|

|

6a9a2ad40f | ||

|

|

55eb8818ea | ||

|

|

a48564e3b2 | ||

|

|

606e9bb0d6 | ||

|

|

abc5184e19 | ||

|

|

99ddd208db | ||

|

|

fc234b12e5 | ||

|

|

04d747ff99 | ||

|

|

4586b344ce | ||

|

|

9b17822229 | ||

|

|

0f33f2bfef | ||

|

|

bc4e3aa7fb | ||

|

|

e60bb35ebd | ||

|

|

024aceda53 | ||

|

|

6155700a68 | ||

|

|

9b3618f73e | ||

|

|

911f483ce3 | ||

|

|

09e1aba567 | ||

|

|

66c63f0511 | ||

|

|

115fd217e8 | ||

|

|

27520c835e | ||

|

|

c686187e35 | ||

|

|

ee02a80a98 | ||

|

|

bb6166c62d | ||

|

|

08f25a7c50 | ||

|

|

292ad2b9b6 | ||

|

|

2627f02a55 | ||

|

|

18e0571e81 | ||

|

|

807e6151f2 | ||

|

|

8a1f0f7f33 | ||

|

|

3cf620ed8e | ||

|

|

75d53c3c6f | ||

|

|

59043a9add | ||

|

|

f7eca425eb | ||

|

|

84b978278e | ||

|

|

579f95f9fc | ||

|

|

99e1006cb5 | ||

|

|

4958097b66 | ||

|

|

cd9b91e1d9 | ||

|

|

fc38bda03c | ||

|

|

c53d2b6f5a | ||

|

|

570bda9c8b | ||

|

|

34c5ab2e56 | ||

|

|

4bc2bf79eb | ||

|

|

db3294e6e0 | ||

|

|

93850d72eb | ||

|

|

c6cc3cbd26 | ||

|

|

23599ee1b2 | ||

|

|

42584ca60a | ||

|

|

d57fc7eab9 | ||

|

|

4845b92248 | ||

|

|

9ad09c7c6d | ||

|

|

35483fa0b1 | ||

|

|

bffd1b1394 | ||

|

|

34e3f9ecee | ||

|

|

9df8f9c651 | ||

|

|

0918299163 | ||

|

|

a46343c84f | ||

|

|

eb87474b48 | ||

|

|

fc9b0af5b6 | ||

|

|

a41abc870d | ||

|

|

78e9d7b50b | ||

|

|

a10beac943 | ||

|

|

9e3963ba23 | ||

|

|

3b2b8f814c | ||

|

|

2363865e00 | ||

|

|

903a44d991 | ||

|

|

6b46f0488d | ||

|

|

c3703fd13f | ||

|

|

79b84d89a3 | ||

|

|

ac01a17214 | ||

|

|

a86388f6de | ||

|

|

4b90097997 | ||

|

|

0094237b97 | ||

|

|

028143ec7e | ||

|

|

ea7b55f1f0 | ||

|

|

b069e3d824 | ||

|

|

2038877e4e | ||

|

|

6c0f901d33 | ||

|

|

1a5fd214b9 | ||

|

|

5512a841e1 | ||

|

|

983955f5d0 | ||

|

|

23ac3fcd89 | ||

|

|

9dbd8cab4b | ||

|

|

6fd718f353 | ||

|

|

09ea58c062 | ||

|

|

fbe68d516c | ||

|

|

4187afd165 | ||

|

|

5d44018018 | ||

|

|

287de0807c | ||

|

|

e9be86229d | ||

|

|

237b78ee63 | ||

|

|

f1d51eae7b | ||

|

|

709ea1ebcb | ||

|

|

9f2e329d99 | ||

|

|

76dd9c392b | ||

|

|

78bd2da267 | ||

|

|

0901f67d89 | ||

|

|

66278443c4 | ||

|

|

c11aba7aa4 | ||

|

|

c43ec575ae | ||

|

|

a7e6bcb366 | ||

|

|

fb98a4d7d0 | ||

|

|

75e9123eaf | ||

|

|

7263ec553e | ||

|

|

4466bb1451 | ||

|

|

e9f2b1efea | ||

|

|

cb1ed3beb1 | ||

|

|

e5713dc63c | ||

|

|

9a6f2a6d96 | ||

|

|

844bdbdf60 | ||

|

|

8d125f8d44 | ||

|

|

36c1471dcf | ||

|

|

9e9c778edd | ||

|

|

87c8457144 | ||

|

|

b873f75ff6 | ||

|

|

706971edbe | ||

|

|

f8022c9c9a | ||

|

|

4f7d2af1fa | ||

|

|

a919a3a519 | ||

|

|

37fc334c46 | ||

|

|

30e295ec28 | ||

|

|

301a0ca66d | ||

|

|

060e423707 | ||

|

|

0da235bceb | ||

|

|

27e09d7aa7 | ||

|

|

0eeab397cf | ||

|

|

3b7850802a | ||

|

|

14b14686f4 | ||

|

|

830ee294ef | ||

|

|

25a8c6b558 | ||

|

|

f747637688 | ||

|

|

bf1667b44d | ||

|

|

51a753c4d2 | ||

|

|

32c21a26a9 | ||

|

|

708c45504a | ||

|

|

460a06ec04 | ||

|

|

f91d2be91e | ||

|

|

d244136efd | ||

|

|

e4ac106b98 | ||

|

|

869fc1698c | ||

|

|

02922845dd | ||

|

|

c5f18a4166 | ||

|

|

195bc7c69d | ||

|

|

19ac0e83ad | ||

|

|

d6d758c5c1 | ||

|

|

9fa232e3a1 | ||

|

|

a00b11822a | ||

|

|

5d0868704b | ||

|

|

bda9561178 | ||

|

|

6f22767486 | ||

|

|

b230a13761 | ||

|

|

53206a0861 | ||

|

|

10baf47c02 | ||

|

|

7f277dda2f | ||

|

|

5a0af081e6 | ||

|

|

a1e5d22570 | ||

|

|

ca39d38dda | ||

|

|

9efddcdbf9 | ||

|

|

95495aa786 | ||

|

|

9525c86a78 | ||

|

|

a9a4f87628 | ||

|

|

4e8c8d4054 |

120 changed files with 6656 additions and 3680 deletions

77

.github/workflows/docker-image.yml

vendored

77

.github/workflows/docker-image.yml

vendored

|

|

@ -1,16 +1,79 @@

|

|||

name: Docker Image CI

|

||||

name: Publish Docker Image

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ master ]

|

||||

branches:

|

||||

- 'master'

|

||||

- 'development'

|

||||

tags:

|

||||

- '*'

|

||||

|

||||

env:

|

||||

# github.repository as <account>/<repo>

|

||||

IMAGE_NAME: lbry/hub

|

||||

|

||||

jobs:

|

||||

login:

|

||||

build:

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

contents: read

|

||||

packages: write

|

||||

# This is used to complete the identity challenge

|

||||

# with sigstore/fulcio when running outside of PRs.

|

||||

id-token: write

|

||||

|

||||

steps:

|

||||

-

|

||||

name: Login to Docker Hub

|

||||

uses: docker/login-action@v1

|

||||

- name: Checkout repository

|

||||

uses: actions/checkout@v3

|

||||

|

||||

# # Install the cosign tool except on PR

|

||||

# # https://github.com/sigstore/cosign-installer

|

||||

# - name: Install cosign

|

||||

# if: github.event_name != 'pull_request'

|

||||

# uses: sigstore/cosign-installer@d6a3abf1bdea83574e28d40543793018b6035605

|

||||

# with:

|

||||

# cosign-release: 'v1.7.1'

|

||||

|

||||

# Workaround: https://github.com/docker/build-push-action/issues/461

|

||||

- name: Setup Docker buildx

|

||||

uses: docker/setup-buildx-action@v2

|

||||

|

||||

# Login against a Docker registry except on PR

|

||||

# https://github.com/docker/login-action

|

||||

- name: Log into registry ${{ env.REGISTRY }}

|

||||

if: github.event_name != 'pull_request'

|

||||

uses: docker/login-action@v2

|

||||

with:

|

||||

username: ${{ secrets.DOCKERHUB_USERNAME }}

|

||||

password: ${{ secrets.DOCKERHUB_TOKEN }}

|

||||

password: ${{ secrets.DOCKERHUB_TOKEN }}

|

||||

|

||||

# Extract metadata (tags, labels) for Docker

|

||||

# https://github.com/docker/metadata-action

|

||||

- name: Extract Docker metadata

|

||||

id: meta

|

||||

uses: docker/metadata-action@v2

|

||||

with:

|

||||

images: ${{ env.IMAGE_NAME }}

|

||||

|

||||

# Build and push Docker image with Buildx (don't push on PR)

|

||||

# https://github.com/docker/build-push-action

|

||||

- name: Build and push Docker image

|

||||

id: build-and-push

|

||||

uses: docker/build-push-action@v3

|

||||

with:

|

||||

context: .

|

||||

push: ${{ github.event_name != 'pull_request' }}

|

||||

tags: ${{ env.IMAGE_NAME }}:${{ github.ref_name }}

|

||||

|

||||

# # Sign the resulting Docker image digest except on PRs.

|

||||

# # This will only write to the public Rekor transparency log when the Docker

|

||||

# # repository is public to avoid leaking data. If you would like to publish

|

||||

# # transparency data even for private images, pass --force to cosign below.

|

||||

# # https://github.com/sigstore/cosign

|

||||

# - name: Sign the published Docker image

|

||||

# if: ${{ github.event_name != 'pull_request' }}

|

||||

# env:

|

||||

# COSIGN_EXPERIMENTAL: "true"

|

||||

# # This step uses the identity token to provision an ephemeral certificate

|

||||

# # against the sigstore community Fulcio instance.

|

||||

# run: cosign sign ${{ steps.meta.outputs.tags }}@${{ steps.build-and-push.outputs.digest }}

|

||||

|

|

|

|||

|

|

@ -35,22 +35,12 @@ USER $user

|

|||

WORKDIR $projects_dir

|

||||

RUN python3.9 -m pip install pip

|

||||

RUN python3.9 -m pip install -e .

|

||||

RUN python3.9 docker/set_build.py

|

||||

RUN python3.9 scripts/set_build.py

|

||||

RUN rm ~/.cache -rf

|

||||

|

||||

# entry point

|

||||

ARG host=localhost

|

||||

ARG tcp_port=50001

|

||||

ARG daemon_url=http://lbry:lbry@localhost:9245/

|

||||

VOLUME $db_dir

|

||||

ENV TCP_PORT=$tcp_port

|

||||

ENV HOST=$host

|

||||

ENV DAEMON_URL=$daemon_url

|

||||

ENV DB_DIRECTORY=$db_dir

|

||||

ENV MAX_SESSIONS=100000

|

||||

ENV MAX_SEND=1000000000000000000

|

||||

ENV MAX_RECEIVE=1000000000000000000

|

||||

|

||||

|

||||

COPY ./docker/scribe_entrypoint.sh /entrypoint.sh

|

||||

COPY ./scripts/entrypoint.sh /entrypoint.sh

|

||||

ENTRYPOINT ["/entrypoint.sh"]

|

||||

95

README.md

95

README.md

|

|

@ -1,69 +1,110 @@

|

|||

## Scribe

|

||||

## LBRY Hub

|

||||

|

||||

Scribe is a python library for building services that use the processed data from the [LBRY blockchain](https://github.com/lbryio/lbrycrd) in an ongoing manner. Scribe contains a set of three core executable services that are used together:

|

||||

* `scribe` ([scribe.blockchain.service](https://github.com/lbryio/scribe/tree/master/scribe/blockchain/service.py)) - maintains a [rocksdb](https://github.com/lbryio/lbry-rocksdb) database containing the LBRY blockchain.

|

||||

* `scribe-hub` ([scribe.hub.service](https://github.com/lbryio/scribe/tree/master/scribe/hub/service.py)) - an electrum server for thin-wallet clients (such as [lbry-sdk](https://github.com/lbryio/lbry-sdk)), provides an api for clients to use thin simple-payment-verification (spv) wallets and to resolve and search claims published to the LBRY blockchain.

|

||||

* `scribe-elastic-sync` ([scribe.elasticsearch.service](https://github.com/lbryio/scribe/tree/master/scribe/elasticsearch/service.py)) - a utility to maintain an elasticsearch database of metadata for claims in the LBRY blockchain

|

||||

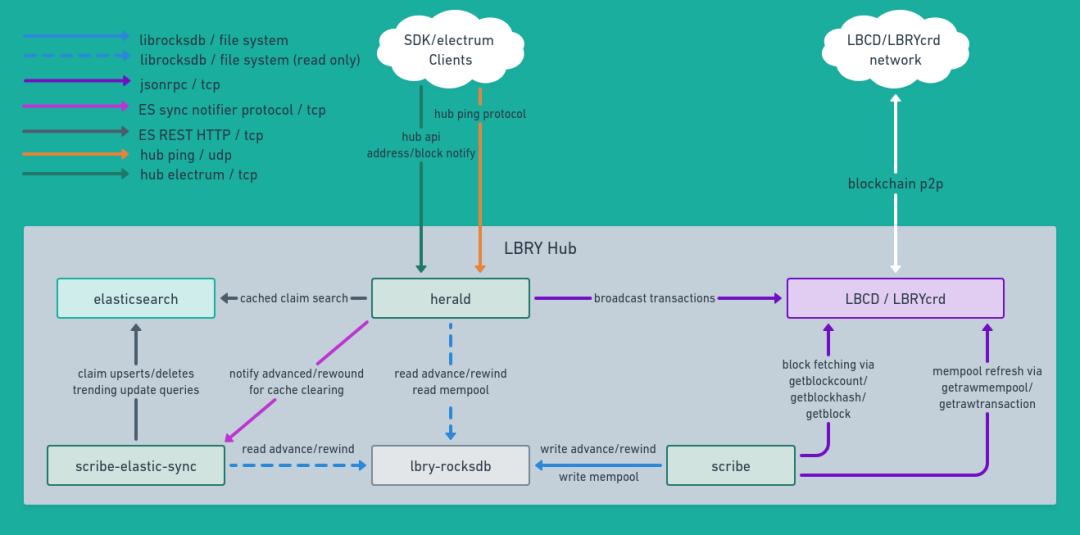

This repo provides a python library, `hub`, for building services that use the processed data from the [LBRY blockchain](https://github.com/lbryio/lbrycrd) in an ongoing manner. Hub contains a set of three core executable services that are used together:

|

||||

* `scribe` ([hub.scribe.service](https://github.com/lbryio/hub/tree/master/hub/service.py)) - maintains a [rocksdb](https://github.com/lbryio/lbry-rocksdb) database containing the LBRY blockchain.

|

||||

* `herald` ([hub.herald.service](https://github.com/lbryio/hub/tree/master/hub/herald/service.py)) - an electrum server for thin-wallet clients (such as [lbry-sdk](https://github.com/lbryio/lbry-sdk)), provides an api for clients to use thin simple-payment-verification (spv) wallets and to resolve and search claims published to the LBRY blockchain. A drop in replacement port of herald written in go - [herald.go](https://github.com/lbryio/herald.go) is currently being worked on.

|

||||

* `scribe-elastic-sync` ([hub.elastic_sync.service](https://github.com/lbryio/hub/tree/master/hub/elastic_sync/service.py)) - a utility to maintain an elasticsearch database of metadata for claims in the LBRY blockchain

|

||||

|

||||

Features and overview of scribe as a python library:

|

||||

|

||||

|

||||

Features and overview of `hub` as a python library:

|

||||

* Uses Python 3.7-3.9 (3.10 probably works but hasn't yet been tested)

|

||||

* An interface developers may implement in order to build their own applications able to receive up-to-date blockchain data in an ongoing manner ([scribe.service.BlockchainReaderService](https://github.com/lbryio/scribe/tree/master/scribe/service.py))

|

||||

* Protobuf schema for encoding and decoding metadata stored on the blockchain ([scribe.schema](https://github.com/lbryio/scribe/tree/master/scribe/schema))

|

||||

* [Rocksdb 6.25.3](https://github.com/lbryio/lbry-rocksdb/) based database containing the blockchain data ([scribe.db](https://github.com/lbryio/scribe/tree/master/scribe/db))

|

||||

* [A community driven performant trending algorithm](https://raw.githubusercontent.com/lbryio/scribe/master/scribe/elasticsearch/trending%20algorithm.pdf) for searching claims ([code](https://github.com/lbryio/scribe/blob/master/scribe/elasticsearch/fast_ar_trending.py))

|

||||

* An interface developers may implement in order to build their own applications able to receive up-to-date blockchain data in an ongoing manner ([hub.service.BlockchainReaderService](https://github.com/lbryio/hub/tree/master/hub/service.py))

|

||||

* Protobuf schema for encoding and decoding metadata stored on the blockchain ([hub.schema](https://github.com/lbryio/hub/tree/master/hub/schema))

|

||||

* [Rocksdb 6.25.3](https://github.com/lbryio/lbry-rocksdb/) based database containing the blockchain data ([hub.db](https://github.com/lbryio/hub/tree/master/hub/db))

|

||||

* [A community driven performant trending algorithm](https://raw.githubusercontent.com/lbryio/hub/master/docs/trending%20algorithm.pdf) for searching claims ([code](https://github.com/lbryio/hub/blob/master/hub/elastic_sync/fast_ar_trending.py))

|

||||

|

||||

## Installation

|

||||

|

||||

Scribe may be run from source, a binary, or a docker image.

|

||||

Our [releases page](https://github.com/lbryio/scribe/releases) contains pre-built binaries of the latest release, pre-releases, and past releases for macOS and Debian-based Linux.

|

||||

Prebuilt [docker images](https://hub.docker.com/r/lbry/scribe/latest-release) are also available.

|

||||

Our [releases page](https://github.com/lbryio/hub/releases) contains pre-built binaries of the latest release, pre-releases, and past releases for macOS and Debian-based Linux.

|

||||

Prebuilt [docker images](https://hub.docker.com/r/lbry/hub/tags) are also available.

|

||||

|

||||

### Prebuilt docker image

|

||||

|

||||

`docker pull lbry/scribe:latest-release`

|

||||

`docker pull lbry/hub:master`

|

||||

|

||||

### Build your own docker image

|

||||

|

||||

```

|

||||

git clone https://github.com/lbryio/scribe.git

|

||||

cd scribe

|

||||

docker build -f ./docker/Dockerfile.scribe -t lbry/scribe:development .

|

||||

git clone https://github.com/lbryio/hub.git

|

||||

cd hub

|

||||

docker build -t lbry/hub:development .

|

||||

```

|

||||

|

||||

### Install from source

|

||||

|

||||

Scribe has been tested with python 3.7-3.9. Higher versions probably work but have not yet been tested.

|

||||

|

||||

1. clone the scribe scribe

|

||||

1. clone the scribe repo

|

||||

```

|

||||

git clone https://github.com/lbryio/scribe.git

|

||||

cd scribe

|

||||

git clone https://github.com/lbryio/hub.git

|

||||

cd hub

|

||||

```

|

||||

2. make a virtual env

|

||||

```

|

||||

python3.9 -m venv scribe-venv

|

||||

python3.9 -m venv hub-venv

|

||||

```

|

||||

3. from the virtual env, install scribe

|

||||

```

|

||||

source scribe-venv/bin/activate

|

||||

source hub-venv/bin/activate

|

||||

pip install -e .

|

||||

```

|

||||

|

||||

That completes the installation, now you should have the commands `scribe`, `scribe-elastic-sync` and `herald`

|

||||

|

||||

These can also optionally be run with `python -m hub.scribe`, `python -m hub.elastic_sync`, and `python -m hub.herald`

|

||||

|

||||

## Usage

|

||||

|

||||

Scribe needs either the [lbrycrd](https://github.com/lbryio/lbrycrd) or [lbcd](https://github.com/lbryio/lbcd) blockchain daemon to be running.

|

||||

### Requirements

|

||||

|

||||

As of block 1124663 (3/10/22) the size of the rocksdb database is 87GB and the size of the elasticsearch volume is 49GB.

|

||||

Scribe needs elasticsearch and either the [lbrycrd](https://github.com/lbryio/lbrycrd) or [lbcd](https://github.com/lbryio/lbcd) blockchain daemon to be running.

|

||||

|

||||

With options for high performance, if you have 64gb of memory and 12 cores, everything can be run on the same machine. However, the recommended way is with elasticsearch on one instance with 8gb of memory and at least 4 cores dedicated to it and the blockchain daemon on another with 16gb of memory and at least 4 cores. Then the scribe hub services can be run their own instance with between 16 and 32gb of memory (depending on settings) and 8 cores.

|

||||

|

||||

As of block 1147423 (4/21/22) the size of the scribe rocksdb database is 120GB and the size of the elasticsearch volume is 63GB.

|

||||

|

||||

### docker-compose

|

||||

The recommended way to run a scribe hub is with docker. See [this guide](https://github.com/lbryio/hub/blob/master/docs/cluster_guide.md) for instructions.

|

||||

|

||||

If you have the resources to run all of the services on one machine (at least 300gb of fast storage, preferably nvme, 64gb of RAM, 12 fast cores), see [this](https://github.com/lbryio/hub/blob/master/docs/docker_examples/docker-compose.yml) docker-compose example.

|

||||

|

||||

### From source

|

||||

|

||||

To start scribe, run the following (providing your own args)

|

||||

### Options

|

||||

|

||||

```

|

||||

scribe --db_dir /your/db/path --daemon_url rpcuser:rpcpass@localhost:9245

|

||||

```

|

||||

#### Content blocking and filtering

|

||||

|

||||

For various reasons it may be desirable to block or filtering content from claim search and resolve results, [here](https://github.com/lbryio/hub/blob/master/docs/blocking.md) are instructions for how to configure and use this feature as well as information about the recommended defaults.

|

||||

|

||||

#### Common options across `scribe`, `herald`, and `scribe-elastic-sync`:

|

||||

- `--db_dir` (required) Path of the directory containing lbry-rocksdb, set from the environment with `DB_DIRECTORY`

|

||||

- `--daemon_url` (required for `scribe` and `herald`) URL for rpc from lbrycrd or lbcd<rpcuser>:<rpcpassword>@<lbrycrd rpc ip><lbrycrd rpc port>.

|

||||

- `--reorg_limit` Max reorg depth, defaults to 200, set from the environment with `REORG_LIMIT`.

|

||||

- `--chain` With blockchain to use - either `mainnet`, `testnet`, or `regtest` - set from the environment with `NET`

|

||||

- `--max_query_workers` Size of the thread pool, set from the environment with `MAX_QUERY_WORKERS`

|

||||

- `--cache_all_tx_hashes` If this flag is set, all tx hashes will be stored in memory. For `scribe`, this speeds up the rate it can apply blocks as well as process mempool. For `herald`, this will speed up syncing address histories. This setting will use 10+g of memory. It can be set from the environment with `CACHE_ALL_TX_HASHES=Yes`

|

||||

- `--cache_all_claim_txos` If this flag is set, all claim txos will be indexed in memory. Set from the environment with `CACHE_ALL_CLAIM_TXOS=Yes`

|

||||

- `--prometheus_port` If provided this port will be used to provide prometheus metrics, set from the environment with `PROMETHEUS_PORT`

|

||||

|

||||

#### Options for `scribe`

|

||||

- `--db_max_open_files` This setting translates into the max_open_files option given to rocksdb. A higher number will use more memory. Defaults to 64.

|

||||

- `--address_history_cache_size` The count of items in the address history cache used for processing blocks and mempool updates. A higher number will use more memory, shouldn't ever need to be higher than 10000. Defaults to 1000.

|

||||

- `--index_address_statuses` Maintain an index of the statuses of address transaction histories, this makes handling notifications for transactions in a block uniformly fast at the expense of more time to process new blocks and somewhat more disk space (~10gb as of block 1161417).

|

||||

|

||||

#### Options for `scribe-elastic-sync`

|

||||

- `--reindex` If this flag is set drop and rebuild the elasticsearch index.

|

||||

|

||||

#### Options for `herald`

|

||||

- `--host` Interface for server to listen on, use 0.0.0.0 to listen on the external interface. Can be set from the environment with `HOST`

|

||||

- `--tcp_port` Electrum TCP port to listen on for hub server. Can be set from the environment with `TCP_PORT`

|

||||

- `--udp_port` UDP port to listen on for hub server. Can be set from the environment with `UDP_PORT`

|

||||

- `--elastic_services` Comma separated list of items in the format `elastic_host:elastic_port/notifier_host:notifier_port`. Can be set from the environment with `ELASTIC_SERVICES`

|

||||

- `--query_timeout_ms` Timeout for claim searches in elasticsearch in milliseconds. Can be set from the environment with `QUERY_TIMEOUT_MS`

|

||||

- `--blocking_channel_ids` Space separated list of channel claim ids used for blocking. Claims that are reposted by these channels can't be resolved or returned in search results. Can be set from the environment with `BLOCKING_CHANNEL_IDS`.

|

||||

- `--filtering_channel_ids` Space separated list of channel claim ids used for blocking. Claims that are reposted by these channels aren't returned in search results. Can be set from the environment with `FILTERING_CHANNEL_IDS`

|

||||

- `--index_address_statuses` Use the address history status index, this makes handling notifications for transactions in a block uniformly fast (must be turned on in `scribe` too).

|

||||

|

||||

## Contributing

|

||||

|

||||

|

|

|

|||

BIN

diagram.png

BIN

diagram.png

Binary file not shown.

|

Before Width: | Height: | Size: 142 KiB |

|

|

@ -1,80 +0,0 @@

|

|||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbry_rocksdb:

|

||||

es01:

|

||||

|

||||

services:

|

||||

scribe:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

image: lbry/scribe:${SCRIBE_TAG:-latest-release}

|

||||

restart: always

|

||||

network_mode: host

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe

|

||||

- DAEMON_URL=http://lbry:lbry@127.0.0.1:9245

|

||||

- MAX_QUERY_WORKERS=2

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6

|

||||

scribe_elastic_sync:

|

||||

depends_on:

|

||||

- es01

|

||||

image: lbry/scribe:${SCRIBE_TAG:-latest-release}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "127.0.0.1:19080:19080" # elastic notifier port

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe-elastic-sync

|

||||

- MAX_QUERY_WORKERS=2

|

||||

- ELASTIC_HOST=127.0.0.1

|

||||

- ELASTIC_PORT=9200

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6

|

||||

scribe_hub:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

- scribe

|

||||

image: lbry/scribe:${SCRIBE_TAG:-latest-release}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "50001:50001" # electrum rpc port and udp ping port

|

||||

- "2112:2112" # comment out to disable prometheus

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe-hub

|

||||

- DAEMON_URL=http://lbry:lbry@127.0.0.1:9245 # used for broadcasting transactions

|

||||

- MAX_QUERY_WORKERS=4 # reader threads

|

||||

- MAX_SESSIONS=100000

|

||||

- ELASTIC_HOST=127.0.0.1

|

||||

- ELASTIC_PORT=9200

|

||||

- HOST=0.0.0.0

|

||||

- PROMETHEUS_PORT=2112

|

||||

- TCP_PORT=50001

|

||||

- ALLOW_LAN_UDP=No

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6

|

||||

es01:

|

||||

image: docker.elastic.co/elasticsearch/elasticsearch:7.16.0

|

||||

container_name: es01

|

||||

environment:

|

||||

- node.name=es01

|

||||

- discovery.type=single-node

|

||||

- indices.query.bool.max_clause_count=8192

|

||||

- bootstrap.memory_lock=true

|

||||

- "ES_JAVA_OPTS=-Dlog4j2.formatMsgNoLookups=true -Xms8g -Xmx8g" # no more than 32, remember to disable swap

|

||||

ulimits:

|

||||

memlock:

|

||||

soft: -1

|

||||

hard: -1

|

||||

volumes:

|

||||

- "es01:/usr/share/elasticsearch/data"

|

||||

ports:

|

||||

- "127.0.0.1:9200:9200"

|

||||

|

|

@ -1,7 +0,0 @@

|

|||

#!/bin/bash

|

||||

|

||||

DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null 2>&1 && pwd )"

|

||||

cd "$DIR/../.." ## make sure we're in the right place. Docker Hub screws this up sometimes

|

||||

echo "docker build dir: $(pwd)"

|

||||

|

||||

docker build --build-arg DOCKER_TAG=$DOCKER_TAG --build-arg DOCKER_COMMIT=$SOURCE_COMMIT -f $DOCKERFILE_PATH -t $IMAGE_NAME .

|

||||

|

|

@ -1,17 +0,0 @@

|

|||

#!/bin/bash

|

||||

|

||||

# entrypoint for scribe Docker image

|

||||

|

||||

set -euo pipefail

|

||||

|

||||

if [ -z "$HUB_COMMAND" ]; then

|

||||

echo "HUB_COMMAND env variable must be scribe, scribe-hub, or scribe-elastic-sync"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

case "$HUB_COMMAND" in

|

||||

scribe ) exec /home/lbry/.local/bin/scribe "$@" ;;

|

||||

scribe-hub ) exec /home/lbry/.local/bin/scribe-hub "$@" ;;

|

||||

scribe-elastic-sync ) exec /home/lbry/.local/bin/scribe-elastic-sync ;;

|

||||

* ) "HUB_COMMAND env variable must be scribe, scribe-hub, or scribe-elastic-sync" && exit 1 ;;

|

||||

esac

|

||||

23

docs/blocking.md

Normal file

23

docs/blocking.md

Normal file

|

|

@ -0,0 +1,23 @@

|

|||

### Claim filtering and blocking

|

||||

|

||||

- Filtered claims are removed from claim search results (`blockchain.claimtrie.search`), they can still be resolved (`blockchain.claimtrie.resolve`)

|

||||

- Blocked claims are not included in claim search results and cannot be resolved.

|

||||

|

||||

Claims that are either filtered or blocked are replaced with a corresponding error message that includes the censoring channel id in a result that would return them.

|

||||

|

||||

#### How to filter or block claims:

|

||||

1. Make a channel (using lbry-sdk) and include the claim id of the channel in `--filtering_channel_ids` or `--blocking_channel_ids` used by `scribe-hub` **and** `scribe-elastic-sync`, depending on which you want to use the channel for. To use both blocking and filtering, make one channel for each.

|

||||

2. Using lbry-sdk, repost the claim to be blocked or filtered using your corresponding channel. If you block/filter a claim id for a channel, it will block/filter all of the claims in the channel.

|

||||

|

||||

#### Defaults

|

||||

|

||||

The example docker-composes in the setup guide use the following defaults:

|

||||

|

||||

Filtering:

|

||||

- `lbry://@LBRY-TagAbuse#770bd7ecba84fd2f7607fb15aedd2b172c2e153f`

|

||||

- `lbry://@LBRY-UntaggedPorn#95e5db68a3101df19763f3a5182e4b12ba393ee8`

|

||||

|

||||

Blocking

|

||||

- `lbry://@LBRY-DMCA#dd687b357950f6f271999971f43c785e8067c3a9`

|

||||

- `lbry://@LBRY-DMCARedFlag#06871aa438032244202840ec59a469b303257cad`

|

||||

- `lbry://@LBRY-OtherUSIllegal#b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6`

|

||||

201

docs/cluster_guide.md

Normal file

201

docs/cluster_guide.md

Normal file

|

|

@ -0,0 +1,201 @@

|

|||

## Cluster environment guide

|

||||

|

||||

For best performance the recommended setup uses three server instances, these can be rented VPSs, self hosted VMs (ideally not on one physical host unless the host is sufficiently powerful), or physical computers. One is a dedicated lbcd node, one an elasticsearch server, and the third runs the hub services (scribe, herald, and scribe-elastic-sync). With this configuration the lbcd and elasticsearch servers can be shared between multiple herald servers - more on that later.

|

||||

Server Requirements (space requirements are at least double what's needed so it's possible to copy snapshots into place or make snapshots):

|

||||

- lbcd: 2 cores, 8gb ram (slightly more may be required syncing from scratch, from a snapshot 8 is plenty), 150gb of NVMe storage

|

||||

- elasticsearch: 8 cores, 9gb of ram (8gb minimum given to ES), 150gb of SSD speed storage

|

||||

- hub: 8 cores, 32gb of ram, 200gb of NVMe storage

|

||||

|

||||

All servers are assumed to be running ubuntu 20.04 with user named `lbry` with passwordless sudo and docker group permissions, ssh configured, ulimits set high (in `/etc/security/limits.conf`, also see [this](https://unix.stackexchange.com/questions/366352/etc-security-limits-conf-not-applied/370652#370652) if the ulimit won't apply), and docker + docker-compose installed. The server running elasticsearch should have swap disabled. The three servers need to be able to communicate with each other, they can be on a local network together or communicate over the internet. This guide will assume the three servers are on the internet.

|

||||

|

||||

### Setting up the lbcd instance

|

||||

Log in to the lbcd instance and perform the following steps:

|

||||

- Build the lbcd docker image by running

|

||||

```

|

||||

git clone https://github.com/lbryio/lbcd.git

|

||||

cd lbcd

|

||||

docker build . -t lbry/lbcd:latest

|

||||

```

|

||||

- Copy the following to `~/docker-compose.yml`

|

||||

```

|

||||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbcd:

|

||||

|

||||

services:

|

||||

lbcd:

|

||||

image: lbry/lbcd:latest

|

||||

restart: always

|

||||

network_mode: host

|

||||

command:

|

||||

- "--notls"

|

||||

- "--rpcuser=lbry"

|

||||

- "--rpcpass=lbry"

|

||||

- "--rpclisten=127.0.0.1"

|

||||

volumes:

|

||||

- "lbcd:/root/.lbcd"

|

||||

ports:

|

||||

- "127.0.0.1:9245:9245"

|

||||

- "9246:9246" # p2p port

|

||||

```

|

||||

- Start lbcd by running `docker-compose up -d`

|

||||

- Check the progress with `docker-compose logs -f --tail 100`

|

||||

|

||||

### Setting up the elasticsearch instance

|

||||

Log in to the elasticsearch instance and perform the following steps:

|

||||

- Copy the following to `~/docker-compose.yml`

|

||||

```

|

||||

version: "3"

|

||||

|

||||

volumes:

|

||||

es01:

|

||||

|

||||

services:

|

||||

es01:

|

||||

image: docker.elastic.co/elasticsearch/elasticsearch:7.16.0

|

||||

container_name: es01

|

||||

environment:

|

||||

- node.name=es01

|

||||

- discovery.type=single-node

|

||||

- indices.query.bool.max_clause_count=8192

|

||||

- bootstrap.memory_lock=true

|

||||

- "ES_JAVA_OPTS=-Dlog4j2.formatMsgNoLookups=true -Xms8g -Xmx8g" # no more than 32, remember to disable swap

|

||||

ulimits:

|

||||

memlock:

|

||||

soft: -1

|

||||

hard: -1

|

||||

volumes:

|

||||

- "es01:/usr/share/elasticsearch/data"

|

||||

ports:

|

||||

- "127.0.0.1:9200:9200"

|

||||

```

|

||||

- Start elasticsearch by running `docker-compose up -d`

|

||||

- Check the status with `docker-compose logs -f --tail 100`

|

||||

|

||||

### Setting up the hub instance

|

||||

- Log in (ssh) to the hub instance and generate and print out a ssh key, this is needed to set up port forwards to the other two instances. Copy the output of the following:

|

||||

```

|

||||

ssh-keygen -q -t ed25519 -N '' -f ~/.ssh/id_ed25519 <<<y >/dev/null 2>&1

|

||||

```

|

||||

- After copying the above key, log out of the hub instance.

|

||||

|

||||

- Log in to the elasticsearch instance add the copied key to `~/.ssh/authorized_keys` (see [this](https://stackoverflow.com/questions/6377009/adding-a-public-key-to-ssh-authorized-keys-does-not-log-me-in-automatically) if confused). Log out of the elasticsearch instance once done.

|

||||

- Log in to the lbcd instance and add the copied key to `~/.ssh/authorized_keys`, log out when done.

|

||||

- Log in to the hub instance and copy the following to `/etc/systemd/system/es-tunnel.service`, replacing `lbry` with your user and `your-elastic-ip` with your elasticsearch instance ip.

|

||||

```

|

||||

[Unit]

|

||||

Description=Persistent SSH Tunnel for ES

|

||||

After=network.target

|

||||

|

||||

[Service]

|

||||

Restart=on-failure

|

||||

RestartSec=5

|

||||

ExecStart=/usr/bin/ssh -NTC -o ServerAliveInterval=60 -o ExitOnForwardFailure=yes -L 127.0.0.1:9200:127.0.0.1:9200 lbry@your-elastic-ip

|

||||

User=lbry

|

||||

Group=lbry

|

||||

|

||||

[Install]

|

||||

WantedBy=multi-user.target

|

||||

```

|

||||

- Next, copy the following to `/etc/systemd/system/lbcd-tunnel.service` on the hub instance, replacing `lbry` with your user and `your-lbcd-ip` with your lbcd instance ip.

|

||||

```

|

||||

[Unit]

|

||||

Description=Persistent SSH Tunnel for lbcd

|

||||

After=network.target

|

||||

|

||||

[Service]

|

||||

Restart=on-failure

|

||||

RestartSec=5

|

||||

ExecStart=/usr/bin/ssh -NTC -o ServerAliveInterval=60 -o ExitOnForwardFailure=yes -L 127.0.0.1:9245:127.0.0.1:9245 lbry@your-lbcd-ip

|

||||

User=lbry

|

||||

Group=lbry

|

||||

|

||||

[Install]

|

||||

WantedBy=multi-user.target

|

||||

```

|

||||

- Verify you can ssh in to the elasticsearch and lbcd instances from the hub instance

|

||||

- Enable and start the ssh port forward services on the hub instance

|

||||

```

|

||||

sudo systemctl enable es-tunnel.service

|

||||

sudo systemctl enable lbcd-tunnel.service

|

||||

sudo systemctl start es-tunnel.service

|

||||

sudo systemctl start lbcd-tunnel.service

|

||||

```

|

||||

- Build the hub docker image on the hub instance by running the following:

|

||||

```

|

||||

git clone https://github.com/lbryio/hub.git

|

||||

cd hub

|

||||

docker build -t lbry/hub:development .

|

||||

```

|

||||

- Copy the following to `~/docker-compose.yml` on the hub instance

|

||||

```

|

||||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbry_rocksdb:

|

||||

|

||||

services:

|

||||

scribe:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe

|

||||

- SNAPSHOT_URL=https://snapshots.lbry.com/hub/lbry-rocksdb.zip

|

||||

command:

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--max_query_workers=2"

|

||||

- "--cache_all_tx_hashes"

|

||||

- "--index_address_statuses"

|

||||

scribe_elastic_sync:

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "127.0.0.1:19080:19080" # elastic notifier port

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe-elastic-sync

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6

|

||||

command:

|

||||

- "--elastic_host=127.0.0.1"

|

||||

- "--elastic_port=9200"

|

||||

- "--max_query_workers=2"

|

||||

herald:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

- scribe

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "50001:50001" # electrum rpc port and udp ping port

|

||||

- "2112:2112" # comment out to disable prometheus metrics

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=herald

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6

|

||||

command:

|

||||

- "--index_address_statuses"

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--elastic_host=127.0.0.1"

|

||||

- "--elastic_port=9200"

|

||||

- "--max_query_workers=4"

|

||||

- "--host=0.0.0.0"

|

||||

- "--max_sessions=100000"

|

||||

- "--prometheus_port=2112" # comment out to disable prometheus metrics

|

||||

```

|

||||

- Start the hub services by running `docker-compose up -d`

|

||||

- Check the status with `docker-compose logs -f --tail 100`

|

||||

|

||||

### Manual setup of docker volumes from snapshots

|

||||

For an example of copying and configuring permissions for a hub docker volume, see [this](https://github.com/lbryio/hub/blob/master/scripts/initialize_rocksdb_snapshot_dev.sh). For an example for the elasticsearch volume, see [this](https://github.com/lbryio/hub/blob/master/scripts/initialize_es_snapshot_dev.sh). **Read these scripts before running them** to avoid overwriting the wrong volume, they are more of a guide on how to set the permissions and where files go than setup scripts.

|

||||

BIN

docs/diagram.png

Normal file

BIN

docs/diagram.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 103 KiB |

99

docs/docker_examples/docker-compose.yml

Normal file

99

docs/docker_examples/docker-compose.yml

Normal file

|

|

@ -0,0 +1,99 @@

|

|||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbcd:

|

||||

lbry_rocksdb:

|

||||

es01:

|

||||

|

||||

services:

|

||||

scribe:

|

||||

depends_on:

|

||||

- lbcd

|

||||

- scribe_elastic_sync

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe

|

||||

- SNAPSHOT_URL=https://snapshots.lbry.com/hub/block_1312050/lbry-rocksdb.tar

|

||||

command: # for full options, see `scribe --help`

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--max_query_workers=2"

|

||||

- "--index_address_statuses"

|

||||

scribe_elastic_sync:

|

||||

depends_on:

|

||||

- es01

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "127.0.0.1:19080:19080" # elastic notifier port

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe-elastic-sync

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8 d4612c256a44fc025c37a875751415299b1f8220

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6 145265bd234b7c9c28dfc6857d878cca402dda94 22335fbb132eee86d374b613875bf88bec83492f f665b89b999f411aa5def311bb2eb385778d49c8

|

||||

command: # for full options, see `scribe-elastic-sync --help`

|

||||

- "--max_query_workers=2"

|

||||

- "--elastic_host=127.0.0.1" # elasticsearch host

|

||||

- "--elastic_port=9200" # elasticsearch port

|

||||

herald:

|

||||

depends_on:

|

||||

- lbcd

|

||||

- scribe_elastic_sync

|

||||

- scribe

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "50001:50001" # electrum rpc port and udp ping port

|

||||

- "2112:2112" # comment out to disable prometheus metrics

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=herald

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8 d4612c256a44fc025c37a875751415299b1f8220

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6 145265bd234b7c9c28dfc6857d878cca402dda94 22335fbb132eee86d374b613875bf88bec83492f f665b89b999f411aa5def311bb2eb385778d49c8

|

||||

command: # for full options, see `herald --help`

|

||||

- "--index_address_statuses"

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--max_query_workers=4"

|

||||

- "--host=0.0.0.0"

|

||||

- "--elastic_services=127.0.0.1:9200/127.0.0.1:19080"

|

||||

- "--prometheus_port=2112" # comment out to disable prometheus metrics

|

||||

# - "--max_sessions=100000 # uncomment to increase the maximum number of electrum connections, defaults to 1000

|

||||

# - "--allow_lan_udp" # uncomment to reply to clients on the local network

|

||||

es01:

|

||||

image: docker.elastic.co/elasticsearch/elasticsearch:7.16.0

|

||||

container_name: es01

|

||||

environment:

|

||||

- node.name=es01

|

||||

- discovery.type=single-node

|

||||

- indices.query.bool.max_clause_count=8192

|

||||

- bootstrap.memory_lock=true

|

||||

- "ES_JAVA_OPTS=-Dlog4j2.formatMsgNoLookups=true -Xms8g -Xmx8g" # no more than 32, remember to disable swap

|

||||

ulimits:

|

||||

memlock:

|

||||

soft: -1

|

||||

hard: -1

|

||||

volumes:

|

||||

- "es01:/usr/share/elasticsearch/data"

|

||||

ports:

|

||||

- "127.0.0.1:9200:9200"

|

||||

lbcd:

|

||||

image: lbry/lbcd:latest

|

||||

restart: always

|

||||

network_mode: host

|

||||

command:

|

||||

- "--notls"

|

||||

- "--listen=0.0.0.0:9246"

|

||||

- "--rpclisten=127.0.0.1:9245"

|

||||

- "--rpcuser=lbry"

|

||||

- "--rpcpass=lbry"

|

||||

volumes:

|

||||

- "lbcd:/root/.lbcd"

|

||||

ports:

|

||||

- "9246:9246" # p2p

|

||||

23

docs/docker_examples/elastic-compose.yml

Normal file

23

docs/docker_examples/elastic-compose.yml

Normal file

|

|

@ -0,0 +1,23 @@

|

|||

version: "3"

|

||||

|

||||

volumes:

|

||||

es01:

|

||||

|

||||

services:

|

||||

es01:

|

||||

image: docker.elastic.co/elasticsearch/elasticsearch:7.16.0

|

||||

container_name: es01

|

||||

environment:

|

||||

- node.name=es01

|

||||

- discovery.type=single-node

|

||||

- indices.query.bool.max_clause_count=8192

|

||||

- bootstrap.memory_lock=true

|

||||

- "ES_JAVA_OPTS=-Dlog4j2.formatMsgNoLookups=true -Xms8g -Xmx8g" # no more than 32, remember to disable swap

|

||||

ulimits:

|

||||

memlock:

|

||||

soft: -1

|

||||

hard: -1

|

||||

volumes:

|

||||

- "es01:/usr/share/elasticsearch/data"

|

||||

ports:

|

||||

- "127.0.0.1:9200:9200"

|

||||

59

docs/docker_examples/hub-compose.yml

Normal file

59

docs/docker_examples/hub-compose.yml

Normal file

|

|

@ -0,0 +1,59 @@

|

|||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbry_rocksdb:

|

||||

|

||||

services:

|

||||

scribe:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe

|

||||

- SNAPSHOT_URL=https://snapshots.lbry.com/hub/block_1312050/lbry-rocksdb.tar

|

||||

command:

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--max_query_workers=2"

|

||||

scribe_elastic_sync:

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "127.0.0.1:19080:19080" # elastic notifier port

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=scribe-elastic-sync

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8 d4612c256a44fc025c37a875751415299b1f8220

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6 145265bd234b7c9c28dfc6857d878cca402dda94 22335fbb132eee86d374b613875bf88bec83492f f665b89b999f411aa5def311bb2eb385778d49c8

|

||||

command:

|

||||

- "--elastic_host=127.0.0.1"

|

||||

- "--elastic_port=9200"

|

||||

- "--max_query_workers=2"

|

||||

herald:

|

||||

depends_on:

|

||||

- scribe_elastic_sync

|

||||

- scribe

|

||||

image: lbry/hub:${SCRIBE_TAG:-master}

|

||||

restart: always

|

||||

network_mode: host

|

||||

ports:

|

||||

- "50001:50001" # electrum rpc port and udp ping port

|

||||

- "2112:2112" # comment out to disable prometheus metrics

|

||||

volumes:

|

||||

- "lbry_rocksdb:/database"

|

||||

environment:

|

||||

- HUB_COMMAND=herald

|

||||

- FILTERING_CHANNEL_IDS=770bd7ecba84fd2f7607fb15aedd2b172c2e153f 95e5db68a3101df19763f3a5182e4b12ba393ee8 d4612c256a44fc025c37a875751415299b1f8220

|

||||

- BLOCKING_CHANNEL_IDS=dd687b357950f6f271999971f43c785e8067c3a9 06871aa438032244202840ec59a469b303257cad b4a2528f436eca1bf3bf3e10ff3f98c57bd6c4c6 145265bd234b7c9c28dfc6857d878cca402dda94 22335fbb132eee86d374b613875bf88bec83492f f665b89b999f411aa5def311bb2eb385778d49c8

|

||||

command:

|

||||

- "--daemon_url=http://lbry:lbry@127.0.0.1:9245"

|

||||

- "--elastic_services=127.0.0.1:9200/127.0.0.1:19080"

|

||||

- "--max_query_workers=4"

|

||||

- "--host=0.0.0.0"

|

||||

- "--max_sessions=100000"

|

||||

- "--prometheus_port=2112" # comment out to disable prometheus metrics

|

||||

19

docs/docker_examples/lbcd-compose.yml

Normal file

19

docs/docker_examples/lbcd-compose.yml

Normal file

|

|

@ -0,0 +1,19 @@

|

|||

version: "3"

|

||||

|

||||

volumes:

|

||||

lbcd:

|

||||

|

||||

services:

|

||||

lbcd:

|

||||

image: lbry/lbcd:latest

|

||||

restart: always

|

||||

network_mode: host

|

||||

command:

|

||||

- "--rpcuser=lbry"

|

||||

- "--rpcpass=lbry"

|

||||

- "--rpclisten=127.0.0.1"

|

||||

volumes:

|

||||

- "lbcd:/root/.lbcd"

|

||||

ports:

|

||||

- "127.0.0.1:9245:9245"

|

||||

- "9246:9246" # p2p port

|

||||

1101

hub/common.py

Normal file

1101

hub/common.py

Normal file

File diff suppressed because it is too large

Load diff

1

hub/db/__init__.py

Normal file

1

hub/db/__init__.py

Normal file

|

|

@ -0,0 +1 @@

|

|||

from .db import SecondaryDB

|

||||

|

|

@ -1,7 +1,7 @@

|

|||

import typing

|

||||

import enum

|

||||

from typing import Optional

|

||||

from scribe.error import ResolveCensoredError

|

||||

from hub.error import ResolveCensoredError

|

||||

|

||||

|

||||

@enum.unique

|

||||

|

|

@ -16,7 +16,7 @@ class DB_PREFIXES(enum.Enum):

|

|||

channel_to_claim = b'J'

|

||||

|

||||

claim_short_id_prefix = b'F'

|

||||

effective_amount = b'D'

|

||||

bid_order = b'D'

|

||||

claim_expiration = b'O'

|

||||

|

||||

claim_takeover = b'P'

|

||||

|

|

@ -48,27 +48,15 @@ class DB_PREFIXES(enum.Enum):

|

|||

touched_hashX = b'e'

|

||||

hashX_status = b'f'

|

||||

hashX_mempool_status = b'g'

|

||||

reposted_count = b'j'

|

||||

effective_amount = b'i'

|

||||

future_effective_amount = b'k'

|

||||

hashX_history_hash = b'l'

|

||||

|

||||

|

||||

COLUMN_SETTINGS = {} # this is updated by the PrefixRow metaclass

|

||||

|

||||

|

||||

CLAIM_TYPES = {

|

||||

'stream': 1,

|

||||

'channel': 2,

|

||||

'repost': 3,

|

||||

'collection': 4,

|

||||

}

|

||||

|

||||

STREAM_TYPES = {

|

||||

'video': 1,

|

||||

'audio': 2,

|

||||

'image': 3,

|

||||

'document': 4,

|

||||

'binary': 5,

|

||||

'model': 6,

|

||||

}

|

||||

|

||||

# 9/21/2020

|

||||

MOST_USED_TAGS = {

|

||||

"gaming",

|

||||

1432

hub/db/db.py

Normal file

1432

hub/db/db.py

Normal file

File diff suppressed because it is too large

Load diff

|

|

@ -1,9 +1,10 @@

|

|||

import asyncio

|

||||

import struct

|

||||

import typing

|

||||

import rocksdb

|

||||

from typing import Optional

|

||||

from scribe.db.common import DB_PREFIXES, COLUMN_SETTINGS

|

||||

from scribe.db.revertable import RevertableOpStack, RevertablePut, RevertableDelete

|

||||

from hub.db.common import DB_PREFIXES, COLUMN_SETTINGS

|

||||

from hub.db.revertable import RevertableOpStack, RevertablePut, RevertableDelete

|

||||

|

||||

|

||||

ROW_TYPES = {}

|

||||

|

|

@ -88,6 +89,12 @@ class PrefixRow(metaclass=PrefixRowType):

|

|||

if v:

|

||||

return v if not deserialize_value else self.unpack_value(v)

|

||||

|

||||

def key_exists(self, *key_args):

|

||||

key_may_exist, _ = self._db.key_may_exist((self._column_family, self.pack_key(*key_args)))

|

||||

if not key_may_exist:

|

||||

return False

|

||||

return self._db.get((self._column_family, self.pack_key(*key_args)), fill_cache=True) is not None

|

||||

|

||||

def multi_get(self, key_args: typing.List[typing.Tuple], fill_cache=True, deserialize_value=True):

|

||||

packed_keys = {tuple(args): self.pack_key(*args) for args in key_args}

|

||||

db_result = self._db.multi_get([(self._column_family, packed_keys[tuple(args)]) for args in key_args],

|

||||

|

|

@ -101,23 +108,44 @@ class PrefixRow(metaclass=PrefixRowType):

|

|||

handle_value(result[packed_keys[tuple(k_args)]]) for k_args in key_args

|

||||

]

|

||||

|

||||

async def multi_get_async_gen(self, executor, key_args: typing.List[typing.Tuple], deserialize_value=True, step=1000):

|

||||

packed_keys = {self.pack_key(*args): args for args in key_args}

|

||||

assert len(packed_keys) == len(key_args), 'duplicate partial keys given to multi_get_dict'

|

||||

db_result = await asyncio.get_event_loop().run_in_executor(

|

||||

executor, self._db.multi_get, [(self._column_family, key) for key in packed_keys]

|

||||

)

|

||||

unpack_value = self.unpack_value

|

||||

|

||||

def handle_value(v):

|

||||

return None if v is None else v if not deserialize_value else unpack_value(v)

|

||||

|

||||

for idx, (k, v) in enumerate((db_result or {}).items()):

|

||||

yield (packed_keys[k[-1]], handle_value(v))

|

||||

if idx % step == 0:

|

||||

await asyncio.sleep(0)

|

||||

|

||||

def stash_multi_put(self, items):

|

||||

self._op_stack.stash_ops([RevertablePut(self.pack_key(*k), self.pack_value(*v)) for k, v in items])

|

||||

|

||||

def stash_multi_delete(self, items):

|

||||

self._op_stack.stash_ops([RevertableDelete(self.pack_key(*k), self.pack_value(*v)) for k, v in items])

|

||||

|

||||

def get_pending(self, *key_args, fill_cache=True, deserialize_value=True):

|

||||

packed_key = self.pack_key(*key_args)

|

||||

last_op = self._op_stack.get_last_op_for_key(packed_key)

|

||||

if last_op:

|

||||

if last_op.is_put:

|

||||

return last_op.value if not deserialize_value else self.unpack_value(last_op.value)

|

||||

else: # it's a delete

|

||||

return

|

||||

v = self._db.get((self._column_family, packed_key), fill_cache=fill_cache)

|

||||

if v:

|

||||

return v if not deserialize_value else self.unpack_value(v)

|

||||

pending_op = self._op_stack.get_pending_op(packed_key)

|

||||

if pending_op and pending_op.is_delete:

|

||||

return

|

||||

if pending_op:

|

||||

v = pending_op.value

|

||||

else:

|

||||

v = self._db.get((self._column_family, packed_key), fill_cache=fill_cache)

|

||||

return None if v is None else (v if not deserialize_value else self.unpack_value(v))

|

||||

|

||||

def stage_put(self, key_args=(), value_args=()):

|

||||

self._op_stack.append_op(RevertablePut(self.pack_key(*key_args), self.pack_value(*value_args)))

|

||||

def stash_put(self, key_args=(), value_args=()):

|

||||

self._op_stack.stash_ops([RevertablePut(self.pack_key(*key_args), self.pack_value(*value_args))])

|

||||

|

||||

def stage_delete(self, key_args=(), value_args=()):

|

||||

self._op_stack.append_op(RevertableDelete(self.pack_key(*key_args), self.pack_value(*value_args)))

|

||||

def stash_delete(self, key_args=(), value_args=()):

|

||||

self._op_stack.stash_ops([RevertableDelete(self.pack_key(*key_args), self.pack_value(*value_args))])

|

||||

|

||||

@classmethod

|

||||

def pack_partial_key(cls, *args) -> bytes:

|

||||

|

|

@ -155,13 +183,14 @@ class BasePrefixDB:

|

|||

UNDO_KEY_STRUCT = struct.Struct(b'>Q32s')

|

||||

PARTIAL_UNDO_KEY_STRUCT = struct.Struct(b'>Q')

|

||||

|

||||

def __init__(self, path, max_open_files=64, secondary_path='', max_undo_depth: int = 200, unsafe_prefixes=None):

|

||||

def __init__(self, path, max_open_files=64, secondary_path='', max_undo_depth: int = 200, unsafe_prefixes=None,

|

||||

enforce_integrity=True):

|

||||

column_family_options = {}

|

||||

for prefix in DB_PREFIXES:

|

||||

settings = COLUMN_SETTINGS[prefix.value]

|

||||

column_family_options[prefix.value] = rocksdb.ColumnFamilyOptions()

|

||||

column_family_options[prefix.value].table_factory = rocksdb.BlockBasedTableFactory(

|

||||

block_cache=rocksdb.LRUCache(settings['cache_size']),

|

||||

block_cache=rocksdb.LRUCache(settings['cache_size'])

|

||||

)

|

||||

self.column_families: typing.Dict[bytes, 'rocksdb.ColumnFamilyHandle'] = {}

|

||||

options = rocksdb.Options(

|

||||

|

|

@ -178,7 +207,9 @@ class BasePrefixDB:

|

|||

cf = self._db.get_column_family(prefix.value)

|

||||

self.column_families[prefix.value] = cf

|

||||

|

||||

self._op_stack = RevertableOpStack(self.get, unsafe_prefixes=unsafe_prefixes)

|

||||

self._op_stack = RevertableOpStack(

|

||||

self.get, self.multi_get, unsafe_prefixes=unsafe_prefixes, enforce_integrity=enforce_integrity

|

||||

)

|

||||

self._max_undo_depth = max_undo_depth

|

||||

|

||||

def unsafe_commit(self):

|

||||

|

|

@ -186,6 +217,7 @@ class BasePrefixDB:

|

|||

Write staged changes to the database without keeping undo information

|

||||

Changes written cannot be undone

|

||||

"""

|

||||

self.apply_stash()

|

||||

try:

|

||||

if not len(self._op_stack):

|

||||

return

|

||||

|

|

@ -206,6 +238,7 @@ class BasePrefixDB:

|

|||

"""

|

||||

Write changes for a block height to the database and keep undo information so that the changes can be reverted

|

||||

"""

|

||||

self.apply_stash()

|

||||

undo_ops = self._op_stack.get_undo_ops()

|

||||

delete_undos = []

|

||||

if height > self._max_undo_depth:

|

||||

|

|

@ -240,6 +273,7 @@ class BasePrefixDB:

|

|||

undo_c_f = self.column_families[DB_PREFIXES.undo.value]

|

||||

undo_info = self._db.get((undo_c_f, undo_key))

|

||||

self._op_stack.apply_packed_undo_ops(undo_info)

|

||||

self._op_stack.validate_and_apply_stashed_ops()

|

||||

try:

|

||||

with self._db.write_batch(sync=True) as batch:

|

||||

batch_put = batch.put

|

||||

|

|

@ -255,10 +289,26 @@ class BasePrefixDB:

|

|||

finally:

|

||||

self._op_stack.clear()

|

||||

|

||||

def apply_stash(self):

|

||||

self._op_stack.validate_and_apply_stashed_ops()

|

||||

|

||||

def get(self, key: bytes, fill_cache: bool = True) -> Optional[bytes]:

|

||||

cf = self.column_families[key[:1]]

|

||||

return self._db.get((cf, key), fill_cache=fill_cache)

|

||||

|

||||

def multi_get(self, keys: typing.List[bytes], fill_cache=True):

|

||||

if len(keys) == 0:

|

||||

return []

|

||||

get_cf = self.column_families.__getitem__

|

||||

db_result = self._db.multi_get([(get_cf(k[:1]), k) for k in keys], fill_cache=fill_cache)

|

||||

return list(db_result.values())

|

||||

|

||||

def multi_delete(self, items: typing.List[typing.Tuple[bytes, bytes]]):

|

||||

self._op_stack.stash_ops([RevertableDelete(k, v) for k, v in items])

|

||||

|

||||

def multi_put(self, items: typing.List[typing.Tuple[bytes, bytes]]):

|

||||

self._op_stack.stash_ops([RevertablePut(k, v) for k, v in items])

|

||||

|

||||

def iterator(self, start: bytes, column_family: 'rocksdb.ColumnFamilyHandle' = None,

|

||||

iterate_lower_bound: bytes = None, iterate_upper_bound: bytes = None,

|

||||

reverse: bool = False, include_key: bool = True, include_value: bool = True,

|

||||

|

|

@ -276,11 +326,11 @@ class BasePrefixDB:

|

|||

def try_catch_up_with_primary(self):

|

||||

self._db.try_catch_up_with_primary()

|

||||

|

||||

def stage_raw_put(self, key: bytes, value: bytes):

|

||||

self._op_stack.append_op(RevertablePut(key, value))

|

||||

def stash_raw_put(self, key: bytes, value: bytes):

|

||||

self._op_stack.stash_ops([RevertablePut(key, value)])

|

||||

|

||||

def stage_raw_delete(self, key: bytes, value: bytes):

|

||||

self._op_stack.append_op(RevertableDelete(key, value))

|

||||

def stash_raw_delete(self, key: bytes, value: bytes):

|

||||

self._op_stack.stash_ops([RevertableDelete(key, value)])

|

||||

|

||||

def estimate_num_keys(self, column_family: 'rocksdb.ColumnFamilyHandle' = None):

|

||||

return int(self._db.get_property(b'rocksdb.estimate-num-keys', column_family).decode())

|

||||

|

|

@ -29,7 +29,7 @@ import typing

|

|||

from asyncio import Event

|

||||

from math import ceil, log

|

||||

|

||||

from scribe.common import double_sha256

|

||||

from hub.common import double_sha256

|

||||

|

||||

|

||||

class Merkle:

|

||||

67

hub/db/migrators/migrate10to11.py

Normal file

67

hub/db/migrators/migrate10to11.py

Normal file

|

|

@ -0,0 +1,67 @@

|

|||

import logging

|

||||

from collections import defaultdict

|

||||

from hub.db.prefixes import ACTIVATED_SUPPORT_TXO_TYPE

|

||||

|

||||

FROM_VERSION = 10

|

||||

TO_VERSION = 11

|

||||

|

||||

|

||||

def migrate(db):

|

||||

log = logging.getLogger(__name__)

|

||||

prefix_db = db.prefix_db

|

||||

|

||||

log.info("migrating the db to version 11")

|

||||

|

||||

effective_amounts = defaultdict(int)

|